Hello

We are testing a migration from OpenStack to OpenNebula because the licence fees for our VMware Integrated OpenStack (VIO) have changed: VMware is now charging for VIO.

We have a SAN, accessed via NFS, that we want to use.

We have installed the latest version of OpenNebula and have our dev environment running on one server.

Our intention is to have the frontend on a dedicated server and, initially, to set up one node on another dedicated server, connected via a private network.

As our NFS has a huge amount of storage and is connected via 10 Gbit networking, we wish to use it to store our virtual machines.

Naturally, it would be nice to use local storage for at least something. For live migrations, however, it is imperative that we use shared storage, hence the NFS.

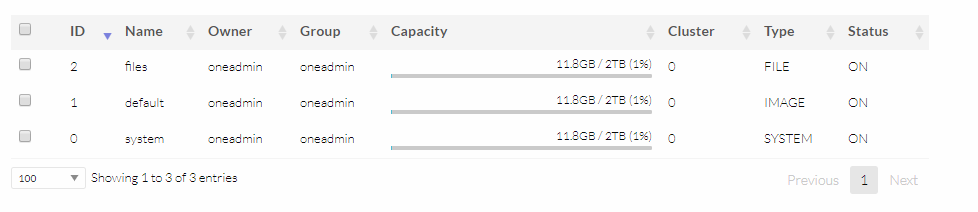

We are somewhat lost among the various datastore types. We have tried mounting the NFS share, but changing the location in oned.conf does not work: we still get errors.

Would someone be able to shed some light on how we connect the NFS for VM storage, and also offer some advice on using some of the local storage for something else, if that is at all possible?

I would be more than happy to pay someone for some personal guidance in setting this up.
